Explainable Artificial Intelligence (XAI) Frameworks for Transparent AI Systems

Date: Feb 10, 2026

The predictions made by AI systems are becoming increasingly important and, in some cases, critical to our well-being, particularly in healthcare, driverless cars, and drones used in military operations.

However, the functioning of these AI models is often not easily understood by the general public or professionals. For example, when an Artificial Neural Network predicts whether an image is of a cat or a dog, it is not clear what characteristics or features the decision is based on. Similarly, when a model predicts the presence of malignant or benign tumor cells in a medical image, it is important to know what parameters were used to make the prediction.

In healthcare, explainability in AI is of utmost importance. Previously, Machine Learning and Deep Learning models were treated as black boxes that made predictions based on inputs, but the parameters behind these decisions were unclear. With the increasing use of AI in our daily lives, and AI making decisions that can impact our lives and safety, the need for explainable AI has become more pressing.

As humans, we need to understand how AI systems make decisions in order to trust them. When a model cannot explain itself, that trust is hard to build. Explainable AI (XAI) aims to make AI models transparent and provide clear explanations and reasons for their decisions.

The greater the potential consequences of AI-based outcomes, the greater the need for XAI. XAI is a new and growing field in the area of AI and Machine Learning that aims to build trust between humans and AI systems by making the inner workings of AI models more transparent.

In this article, we will discuss 6 XAI frameworks: SHAP, LIME, ELI5, What-if Tool, AIX360, and Skater.

1. SHAP

SHAP stands for SHapley Additive exPlanations. It is a model-agnostic method that uses Shapley values from game theory to explain how much each feature contributes to a model's prediction. SHAP can be used with various types of models, including simple machine learning algorithms such as linear regression and logistic regression, as well as more complex models like deep learning models for image classification and text summarization.
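To make the idea of Shapley values concrete, here is a minimal sketch in plain Python that computes them exactly for a tiny model. It does not use the SHAP library, which relies on faster approximations. The function `shapley_values`, the `toy_model`, and the all-zero baseline are assumptions made up for this illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one instance of a model with few features.

    Features outside a coalition are replaced by their baseline value.
    The cost grows exponentially with the number of features.
    """
    n = len(instance)

    def value(coalition):
        # Model output when only the features in `coalition` take the instance's values.
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(x)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size: |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


# Hypothetical toy model: a linear term plus an interaction between features 1 and 2.
def toy_model(x):
    return 2.0 * x[0] + x[1] * x[2]


instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, instance, baseline)
print(phi)
```

For this toy model, feature 0 receives a contribution of about 2, and the interaction term is split evenly between features 1 and 2 (about 3 each). The contributions add up to the difference between the prediction for the instance and the prediction for the baseline, which is the "additive" part of the name.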

SHAP can be used, for example, to explain the predictions of a sentiment analysis model.

Some other examples are shown below:

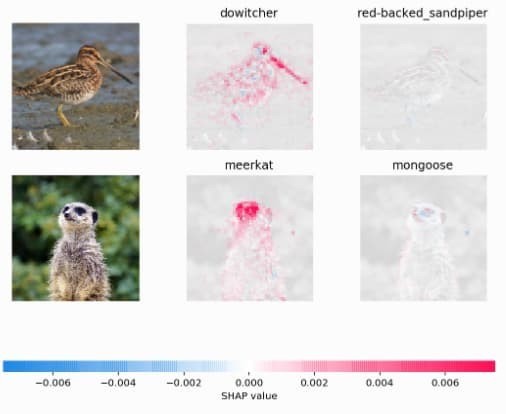

Explanation of an image classification model

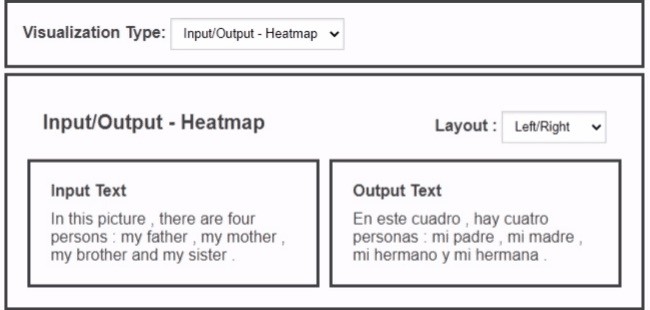

Example of text translation explanation

Link- https://shap.readthedocs.io/

2. LIME

LIME stands for Local Interpretable Model-agnostic Explanations. Like SHAP, it explains how much each feature contributes to the prediction for a single data sample, and it is generally faster to compute. LIME can explain any black-box classifier with two or more classes and has built-in support for scikit-learn classifiers.

Some screenshots of the explanations produced by LIME:

Two-class text data

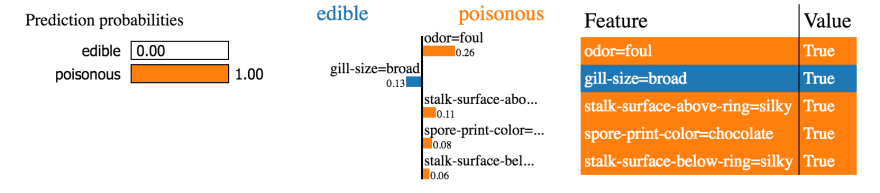

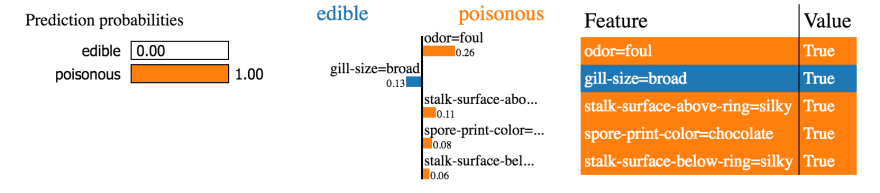

Tabular data

Image explanation for a cat prediction: green regions support the prediction (+ve) and red regions count against it (-ve)
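The core idea behind LIME for tabular data can be sketched in plain Python: perturb the sample, query the black-box model, weight each perturbed point by its closeness to the original, and fit a weighted linear model whose coefficients serve as the explanation. This is a simplified illustration, not the LIME library's implementation; the function names, the Gaussian perturbation, and the parameters `scale` and `kernel_width` are assumptions chosen for the example.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def local_linear_explanation(predict, instance, num_samples=500,
                             scale=0.5, kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear surrogate around `instance`.

    Returns (intercept, coefficients); each coefficient is the local
    effect of one feature on the black-box output.
    """
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(num_samples):
        # Perturb the instance with Gaussian noise (an assumption of this sketch).
        sample = [v + rng.gauss(0.0, scale) for v in instance]
        offsets = [s - v for s, v in zip(sample, instance)]
        dist2 = sum(o * o for o in offsets)
        rows.append([1.0] + offsets)  # intercept column plus centered features
        targets.append(predict(sample))
        # Exponential kernel: nearby samples count more than distant ones.
        weights.append(math.exp(-dist2 / kernel_width ** 2))
    # Weighted normal equations: (X^T W X) beta = X^T W y
    d = len(instance) + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(d)]
            for i in range(d)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets))
            for i in range(d)]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[0], beta[1:]


# Hypothetical black box: nonlinear in feature 0, linear in feature 1.
def black_box(x):
    return x[0] ** 2 + 3.0 * x[1]


intercept, coefficients = local_linear_explanation(black_box, [1.0, 2.0])
print(intercept, coefficients)
```

Around the point [1.0, 2.0], the surrogate's coefficients should land near the local slopes of the black box (roughly 2 for feature 0 and 3 for feature 1), although the exact numbers depend on the random perturbations.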

3. ELI5

ELI5 is a Python package that helps debug machine learning classifiers and explain their predictions. It supports various ML frameworks, including scikit-learn, Keras, XGBoost, LightGBM, and CatBoost.

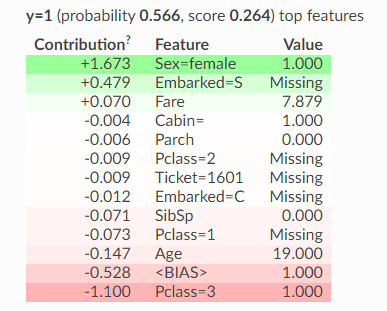

Example showing the importance of various features in Titanic Dataset

Link- https://eli5.readthedocs.io/
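One common way to score feature importance, which ELI5 also offers, is permutation importance: shuffle one feature column at a time and measure how much the model's accuracy drops. The sketch below shows the idea in plain Python and does not use ELI5's API; `permutation_importance`, `toy_classifier`, and the data are hypothetical names and values made up for this illustration.

```python
import random


def permutation_importance(predict, rows, labels, n_repeats=5, seed=0):
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle only this column, leaving the other features untouched.
            column = [r[col] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical data: feature 0 determines the label, feature 1 is ignored by the model.
rows = [[i % 2, (i * 7) % 5] for i in range(20)]
labels = [r[0] for r in rows]


def toy_classifier(row):
    return row[0]


importances = permutation_importance(toy_classifier, rows, labels)
print(importances)
```

Because the toy classifier never looks at feature 1, shuffling that column cannot change its accuracy, so its importance is zero, while shuffling feature 0 breaks the link to the label and yields a positive score.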

4. What-if Tool

The What-if Tool (WIT) was developed by Google to help understand the behavior of trained ML models. With WIT, you can test performance in hypothetical situations, analyze the importance of different data features, and visualize model behavior for different ML fairness metrics. WIT is available as an extension in Jupyter, Colaboratory, and Cloud AI Platform notebooks and can be used for binary classification, multi-class classification, and regression tasks.

Link- https://pair-code.github.io/what-if-tool/
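Testing a model in hypothetical situations boils down to editing a data point and watching the prediction change. The sketch below shows that idea in plain Python. It is not WIT's interface, which is an interactive visual tool; the `what_if` helper, the `toy_model`, and the applicant values are hypothetical.

```python
def what_if(predict, instance, feature_index, candidate_values):
    """Return (value, prediction) pairs after editing one feature of one instance."""
    results = []
    for value in candidate_values:
        edited = list(instance)  # copy, so the original instance is not modified
        edited[feature_index] = value
        results.append((value, predict(edited)))
    return results


# Hypothetical scoring model: approve (1) when a weighted score reaches a threshold.
def toy_model(x):
    income, debt = x
    return 1 if 0.5 * income - 1.0 * debt >= 10 else 0


applicant = [30, 8]  # score 0.5 * 30 - 8 = 7, below the threshold of 10
results = what_if(toy_model, applicant, 0, [30, 34, 36, 40])
for value, prediction in results:
    print(value, prediction)
```

In this toy example the prediction flips from 0 to 1 once the first feature reaches 36, which is the kind of decision boundary a what-if analysis is meant to surface.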

5. AIX360

AIX360, or AI Explainability 360, is an open-source toolkit developed by IBM Research that helps you understand how machine learning models predict labels throughout the AI application lifecycle. The toolkit is designed to be extensible, and it aims to give you a clear picture of how your models make their predictions.

Link- https://aix360.mybluemix.net/

6. Skater

Skater is an open-source Python library that offers a unified framework for model interpretation. It enables you to build an interpretable machine learning system for real-world use cases. The library helps demystify the learned structures of a black-box model, both globally (inferring on the basis of the complete data set) and locally (inferring about an individual prediction).

Link- https://github.com/oracle/Skater

Why are XAI frameworks crucial in the context of People Analytics?

They provide transparency and accountability in the decision-making process of AI systems. Here are some reasons why XAI frameworks are important in this context:

Explanation of decisions made by AI systems: XAI frameworks allow the understanding of how an AI system arrived at a particular decision. This is especially important in People Analytics where decisions related to hiring, promotion, or performance evaluation can have a significant impact on an individual's career.

Avoiding bias in AI systems: XAI frameworks allow for the detection and correction of biases in AI systems, which can be particularly relevant in People Analytics. For instance, biases in job candidate selection algorithms can lead to discrimination and a lack of diversity in the workplace.

Ensuring fairness in AI systems: XAI frameworks can help to ensure that AI systems are making decisions that are fair and just. This is particularly relevant in People Analytics where decisions based on biased data can have a negative impact on individuals and organizations.

Enhancing trust in AI systems: XAI frameworks can increase the trust in AI systems by providing insights into their decision-making processes. This is important in People Analytics, where decisions based on AI systems can have a major impact on people's lives and careers.

In conclusion, XAI frameworks play a crucial role in ensuring the ethical and responsible use of AI in People Analytics by promoting transparency, accountability, fairness, and trust in AI systems.